Strategies of load balancing and scaling an API gateway to handle high traffic volumes

API gateway load balancing is a technique that distributes incoming network traffic across multiple backend endpoints, also known as the server pool. This technique is vital in the modern internet landscape, where a busy website can handle thousands, if not millions, of concurrent client requests.

You can optimize API integration load balancing by employing several strategies, including monitoring performance, installing a load balancer, caching data, auto-scaling, and horizontal scaling. But what do these strategies entail? This article explores the best practices and tips for load balancing and scaling a private or open source API gateway.

Understanding the Load on an API Gateway

The load on your API integration software is the amount of inbound traffic headed to your backend servers. A load balancer spreads this traffic across the servers in the pool so that none is overwhelmed, which helps keep performance and response times high. Moreover, load balancing improves uptime and reliability by routing client requests only to servers that are online.

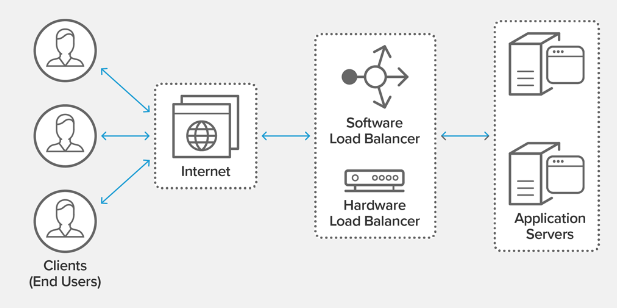

Image Source: API load balancing

Key metrics to have in mind when monitoring the load performance of private APIs or open source gateways include:

Latency

Latency is a metric that gauges the time your API gateway takes to process and respond to client requests. Higher latency means slower responses for clients, and a sustained rise usually points to a performance issue in your integration that needs fixing.

Request count

Monitoring the number of requests directed to your edge microservices can help you understand the API load and what you can do to optimize it to handle the traffic.

Error status codes

A fully functional API gateway returns 4XX status codes for client errors and 5XX status codes for server errors. You can track how frequently these codes are generated to identify and troubleshoot errors in your edge microservices.

Integration latency

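Integration latency is the time between the gateway forwarding a request to the backend and receiving the backend’s response. Comparing it with overall latency helps you tell whether a glitch comes from the backend microservices or from the API gateway itself.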

Cache hit rate

If your private API gateway is configured to cache responses in a separate data store, tracking the cache hit rate (the share of requests served from the cache) can help determine if the load on the backend is reduced as intended.
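To make these metrics concrete, here is a minimal sketch of how they could be computed from a batch of request records. The `RequestRecord` fields, the `summarize` function, and the nearest-rank percentile method are assumptions chosen for illustration, not part of any particular gateway’s API.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestRecord:
    """One request as seen by the gateway (hypothetical log shape)."""
    latency_ms: float   # total time the gateway took to respond
    status: int         # HTTP status code returned to the client
    cache_hit: bool     # True if the response was served from the cache


def percentile(values, pct):
    """Nearest-rank percentile; returns 0.0 for an empty list."""
    if not values:
        return 0.0
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def summarize(records):
    """Compute request count, p95 latency, error rates, and cache hit rate."""
    total = len(records)
    if total == 0:
        return {"request_count": 0, "p95_latency_ms": 0.0,
                "client_error_rate": 0.0, "server_error_rate": 0.0,
                "cache_hit_rate": 0.0}
    client_errors = sum(1 for r in records if 400 <= r.status < 500)
    server_errors = sum(1 for r in records if 500 <= r.status < 600)
    hits = sum(1 for r in records if r.cache_hit)
    return {
        "request_count": total,
        "p95_latency_ms": percentile([r.latency_ms for r in records], 95),
        "client_error_rate": client_errors / total,
        "server_error_rate": server_errors / total,
        "cache_hit_rate": hits / total,
    }
```

A percentile is used instead of an average here because a few very slow requests can hide behind a healthy-looking mean.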

But how do you measure the performance of these metrics? Here are some popular tools and techniques to get you started:

- Amazon CloudWatch: a cloud-based monitoring service that tracks metrics such as latency, request count, and error rate, and can notify you when the thresholds you set are crossed.

- Load testing tools: cutting-edge load testing tools like Gatling, Artillery, and Apache JMeter can simulate traffic loads to help you identify performance glitches in your private API gateway.

- AWS X-Ray: a paid service that enables clients to monitor and debug distributed applications running on AWS infrastructure. The service can point you to the errors that hinder your edge microservices and AWS services.

- Third-party monitoring tools: external monitoring tools like AppDynamics, New Relic, and Datadog can help you track and understand API gateway performance metrics.

Load Balancing Strategies for an API Gateway

Load balancers give you more than API gateway security: by routing high volumes of requests across distributed servers, they enhance the integration’s overall performance and scalability. Technically, this approach improves the availability of your databases, web applications, or any other computing resource in the backend. In return, customers enjoy a smooth user experience in the front end.

Prevalent load balancing strategies for integrated solutions like Tyk Gateway include the following:

Least connections load balancing

This load-balancing strategy sends each new request to the backend server with the fewest active connections. This ensures that the load goes to the server best placed to handle it at that moment.
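A minimal sketch of the idea follows. The `LeastConnectionsBalancer` class and its `acquire`/`release` methods are hypothetical names, and the sketch assumes the caller reports when each request finishes.

```python
class LeastConnectionsBalancer:
    """Tracks active connections per server and picks the least busy one."""

    def __init__(self, servers):
        # Map each server name to its current number of active connections.
        self.active = {server: 0 for server in servers}

    def acquire(self):
        """Pick the server with the fewest active connections and count one more."""
        # Ties go to the server listed first, because dicts keep insertion order.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        """Call when a request completes so the server's count drops again."""
        if self.active[server] > 0:
            self.active[server] -= 1
```

Because the counts change as requests finish, this strategy adapts to slow requests in a way that a fixed rotation cannot.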

Round-robin load balancing

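The round-robin strategy routes inbound traffic to backend servers in a circular sequence: each new request goes to the next server in the list, and the list wraps around at the end. This approach distributes requests evenly, which works best when the servers have similar capacity and the requests have similar cost.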
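The rotation can be sketched in a few lines. The `RoundRobinBalancer` name and the server labels are illustrative assumptions.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers one after another, wrapping around at the end."""

    def __init__(self, servers):
        # cycle() repeats the list endlessly in its original order.
        self._rotation = cycle(list(servers))

    def next_server(self):
        return next(self._rotation)


balancer = RoundRobinBalancer(["a", "b", "c"])
first_five = [balancer.next_server() for _ in range(5)]
# first_five is ["a", "b", "c", "a", "b"]
```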

Weighted load balancing

This strategy entails assigning varying weights to your backend servers based on capacity or performance, ensuring that greater incoming traffic is routed to the more powerful servers.
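There are several ways to honor weights. The sketch below uses a smooth weighted round-robin, which is one possible choice rather than the only one. The `SmoothWeightedBalancer` class and the example weights are assumptions for illustration.

```python
class SmoothWeightedBalancer:
    """Picks servers in proportion to their weights, spreading picks out evenly."""

    def __init__(self, weights):
        # weights: mapping of server name -> positive weight (hypothetical values).
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("weights must be a non-empty mapping of positive numbers")
        self.weights = dict(weights)
        self.total = sum(self.weights.values())
        self.current = {server: 0 for server in self.weights}

    def next_server(self):
        # Every server gains its weight, then the leader is chosen and
        # pushed back by the total so that others get their turn.
        for server, weight in self.weights.items():
            self.current[server] += weight
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= self.total
        return chosen


balancer = SmoothWeightedBalancer({"big": 3, "small": 1})
picks = [balancer.next_server() for _ in range(8)]
# "big" is picked 6 times and "small" 2 times out of 8.
```

Compared with sending three requests in a row to the larger server, this variant interleaves the picks, which avoids short bursts on a single machine.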

IP Hash load balancing

As the name suggests, the strategy hashes the client’s IP address to pick a backend server, so requests from the same client are consistently routed to the same server. This keeps client session management seamless as long as the server pool does not change.
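A minimal sketch of the mapping is shown below. The `pick_server` function and the choice of SHA-256 are assumptions; a stable hash is used because Python’s built-in `hash()` for strings varies between runs.

```python
import hashlib


def pick_server(client_ip, servers):
    """Map a client IP to one server in a fixed, ordered list of servers."""
    if not servers:
        raise ValueError("servers must not be empty")
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an integer, then wrap it
    # into the range of valid list indexes.
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]
```

Note that with this simple modulo approach, adding or removing a server remaps most clients to a different server. Consistent hashing is a common way to reduce that churn.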

Hybrid load balancing

The hybrid approach involves deploying multiple load-balancing algorithms together to enhance performance and reliability. For instance, you can use IP Hash load balancing for requests that need session affinity and round-robin load balancing for stateless requests.
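One way to combine two algorithms is sketched below, under the assumption that the gateway can tell which requests need to stay on the same server. The `HybridBalancer` class and its `sticky` flag are hypothetical.

```python
import hashlib
from itertools import cycle


class HybridBalancer:
    """Uses IP hash for sticky requests and round-robin for everything else."""

    def __init__(self, servers):
        self.servers = list(servers)
        self._rotation = cycle(self.servers)

    def pick(self, client_ip, sticky):
        if sticky:
            # Same client IP always maps to the same server in this pool.
            digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
            index = int.from_bytes(digest[:8], "big") % len(self.servers)
            return self.servers[index]
        # Stateless requests simply take the next server in the rotation.
        return next(self._rotation)
```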

Scaling strategies for an API gateway

Scaling refers to giving your API gateway integration extra resources, such as servers or instances, so that it can handle more traffic and meet higher availability demands. Scaling your API gateway open source resources comes with various business benefits, including:

- Enhanced performance: distributing inbound traffic to multiple instances or servers, ensuring each handles a smaller load for faster responses.

- Greater availability: integrating more resources with your micro edge services ensures the system can handle more incoming traffic without downtime.

- Flexibility and agility: optimizing computing resources by adding or removing them as much as needed enhances an organization’s flexibility and agility to adapt to shifting demand patterns.

- Cost optimization: scaling can help optimize your overall API gateway cost by enabling high-volume traffic handling functionalities without investing in new hardware or IT infrastructure.

With these benefits in mind, here are some strategies that can help you meet your edge microservices scaling needs:

Horizontal scaling

This strategy entails adding more servers or instances behind your edge microservices to increase capacity, especially when the client’s needs cannot be met with one server or instance.

Vertical scaling

Vertical scaling increases the capacity of a single instance or server, for example by adding CPU or memory, so that it can handle high-demand traffic. It is an ideal strategy when you don’t want to add more servers, although the size of a single machine sets an upper limit.

Auto-scaling

As the name suggests, this strategy leverages predefined criteria, such as a CPU utilization or request-rate threshold, to automatically add or remove computational resources so that capacity matches the current demand. It’s a go-to option if you want to cut costs, since you avoid paying for idle capacity.
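A minimal sketch of one such criterion is a proportional rule: scale the instance count by the ratio of observed load to target load, then clamp the result. The Kubernetes Horizontal Pod Autoscaler uses a formula of the same shape. The function name, parameters, and default limits below are assumptions for illustration.

```python
import math


def desired_instances(current, observed_load, target_load,
                      min_instances=1, max_instances=10):
    """Return how many instances are needed to bring per-instance load to target.

    observed_load and target_load are per-instance values in the same unit,
    for example requests per second or CPU percent.
    """
    if target_load <= 0:
        raise ValueError("target_load must be positive")
    raw = math.ceil(current * observed_load / target_load)
    # Clamp so the system never scales to zero or grows without bound.
    return max(min_instances, min(max_instances, raw))


# 4 instances at 90 req/s each, with a target of 60 req/s each.
scale_out = desired_instances(4, 90, 60)   # 6
# The same 4 instances at 30 req/s each.
scale_in = desired_instances(4, 30, 60)    # 2
```

In practice, autoscalers also add cooldown periods or tolerance bands so that small fluctuations do not cause constant scaling up and down.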

Cloud-Based Solutions for an API Gateway Load Balancing and Scaling

Besides these strategies, CTOs can also leverage cloud-based scaling solutions for API gateway custom domains. Managed cloud offerings typically bundle security features, such as firewalls and encryption, that help shield your integration against breaches, although you still need to configure them correctly.

Moreover, cloud-based scaling solutions offer greater availability and ease of use versus their on-premise counterparts. Common examples of these solutions include the following:

- Amazon API Gateway: a managed IT service that offers in-built scaling and load balancing functionalities to optimize your system for improved uptime and performance.

- Tyk: a fully-managed cloud-based API Management solution that is powerful, flexible and highly scalable, which makes it easy for developers to create, secure, publish and maintain APIs at any scale, anywhere in the world.

- Google Cloud Endpoints: another managed IT service that lets clients build and deploy edge microservices in the cloud. It comes with in-built load balancing and auto-scaling features.

- Microsoft Azure API Management: a managed service by Microsoft that enables users to optimize their edge microservices with in-built scaling and load balancing features to handle high inbound traffic.

- Kong: a cloud-native open-source API gateway with in-built load balancing that can be scaled horizontally for deploying high-performance integrations.

Here is how to implement a cloud-based solution for your API integration:

- Select a reputable cloud service provider

- Create an API gateway and define its authentication or authorization protocols

- Set up scaling and load-balancing functionalities

- Tweak the functional policies as needed to achieve optimal performance

- Test and deploy the solution to production

The load balancing strategies listed in this guide, such as IP hash, round-robin, and hybrid, will help you optimize your API gateway for higher availability and faster responses to user requests. Similarly, you can perform vertical, horizontal, or automatic scaling to boost performance.